Deep Learning-based Downsampling and Semantic Segmentation of LiDAR Data for Urban Localization of Autonomous Vehicles

© GIH

| Led by: | Marvin Scherff, Rozhin Moftizadeh, Hamza Alkhatib, Marius Lindauer |

| Team: | Yaxi Wang |

| Year: | 2024 |

| Duration: | 05/2024 – 11/2024 |

Background

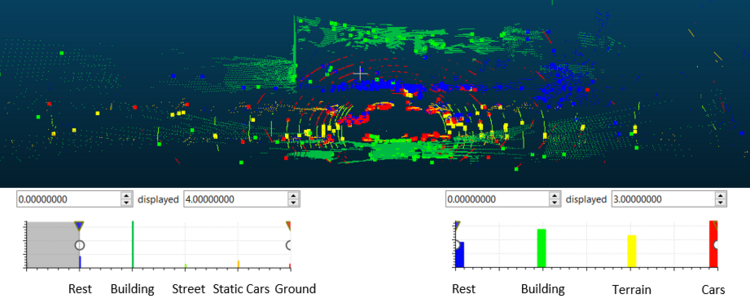

Urban environments present unique challenges for autonomous vehicle navigation because the precision offered by Global Navigation Satellite Systems (GNSS) such as GPS is often insufficient there. Dense urban landscapes can disrupt satellite signals, causing inaccurate positioning, especially in narrow streets with limited sky exposure. LiDAR, in contrast, supports reliable localization by continuously scanning the environment to identify distinct features and align them with existing map data or across scans. Although LiDAR generates detailed 3D representations of the surroundings, the large volume of data it produces poses significant challenges for computation and real-time processing. A method that efficiently downsamples and segments LiDAR data is therefore crucial for real-time, filter-based vehicle localization with enhanced scene comprehension.

Objective

This thesis explores how simultaneously downsampling and segmenting LiDAR point clouds with deep learning affects vehicle localization, aiming to merge the traditional two-step process into a single, optimized model framework. To ensure broad applicability, the approach is tested on a variety of LiDAR systems using both public and proprietary datasets.

Approach

This work will adapt existing state-of-the-art models into a novel framework that concurrently downsamples and segments urban LiDAR point clouds. It draws on techniques like SampleNet and ASPNet, which offer differentiable relaxations of downsampling that maintain data fidelity for deep learning tasks, and will also incorporate diverse, efficient segmentation networks suitable for projection-, voxel-, or point-based inputs. The model predictions will be integrated into the localization filter to evaluate their effectiveness, and the results will guide adjustments towards the final architecture, training procedure, and hyperparameters, going beyond traditional grid search optimization.
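To make the idea of a differentiable relaxation of downsampling more concrete, the following is a minimal sketch of a SampleNet-style soft projection, in which a generated point is replaced by a distance-weighted average of its nearest neighbors in the input cloud. It is written in plain Python for readability and is not the thesis implementation; the function name `soft_project`, its parameters, and the toy points are assumptions made for illustration. A real model would express the same operation with PyTorch tensors so that gradients can flow through it.

```python
import math


def soft_project(query, points, k=3, temperature=1.0):
    """Softly project one query point onto its k nearest neighbors in `points`.

    Hypothetical helper for illustration only (not taken from SampleNet's code).
    """
    # Squared Euclidean distance from the query to every input point.
    dists = [(sum((q - p) ** 2 for q, p in zip(query, pt)), pt) for pt in points]
    nearest = sorted(dists, key=lambda item: item[0])[:k]

    # Softmax over negative squared distances, scaled by temperature^2.
    # Subtracting the smallest distance keeps the exponentials numerically stable.
    d_min = nearest[0][0]
    weights = [math.exp(-(d - d_min) / temperature ** 2) for d, _ in nearest]
    total = sum(weights)

    # Weighted average of the neighbors, computed per coordinate axis.
    return tuple(
        sum(w * pt[axis] for w, (_, pt) in zip(weights, nearest)) / total
        for axis in range(len(query))
    )


# Toy input cloud and one generated point (assumed values).
points = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (5.0, 5.0, 5.0)]
query = (0.1, 0.1, 0.0)

soft = soft_project(query, points, k=3, temperature=1.0)   # blend of neighbors
hard = soft_project(query, points, k=3, temperature=0.01)  # close to nearest-neighbor pick
```

With a large temperature the output is a smooth blend of neighbors; as the temperature shrinks, the weights concentrate on the closest input point, so the result approaches a hard selection from the original cloud. This is why such a relaxation can be trained with gradients and still yield a subset of real points at inference time.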

Provided support

To support work on the topic, the following will be shared: possible datasets for model training, a script that converts the DL predictions to the LiDAR format required by the filter, and the localization framework implemented in MATLAB (including the corresponding map and additional measurement data). In addition, the student will be provided with papers on the approaches mentioned above and with additional material outlining the filter methodology.

Recommended pre-requisites:

- Essential: Python, PyTorch, ability to adapt existing code bases.

- Good-to-know: MATLAB.